The $670 Billion Question: Is AI Demand Real, or Are We Building on Subsidized Sand?

A framework for spotting subsidized AI demand before it turns into stranded assets

Key Takeaways

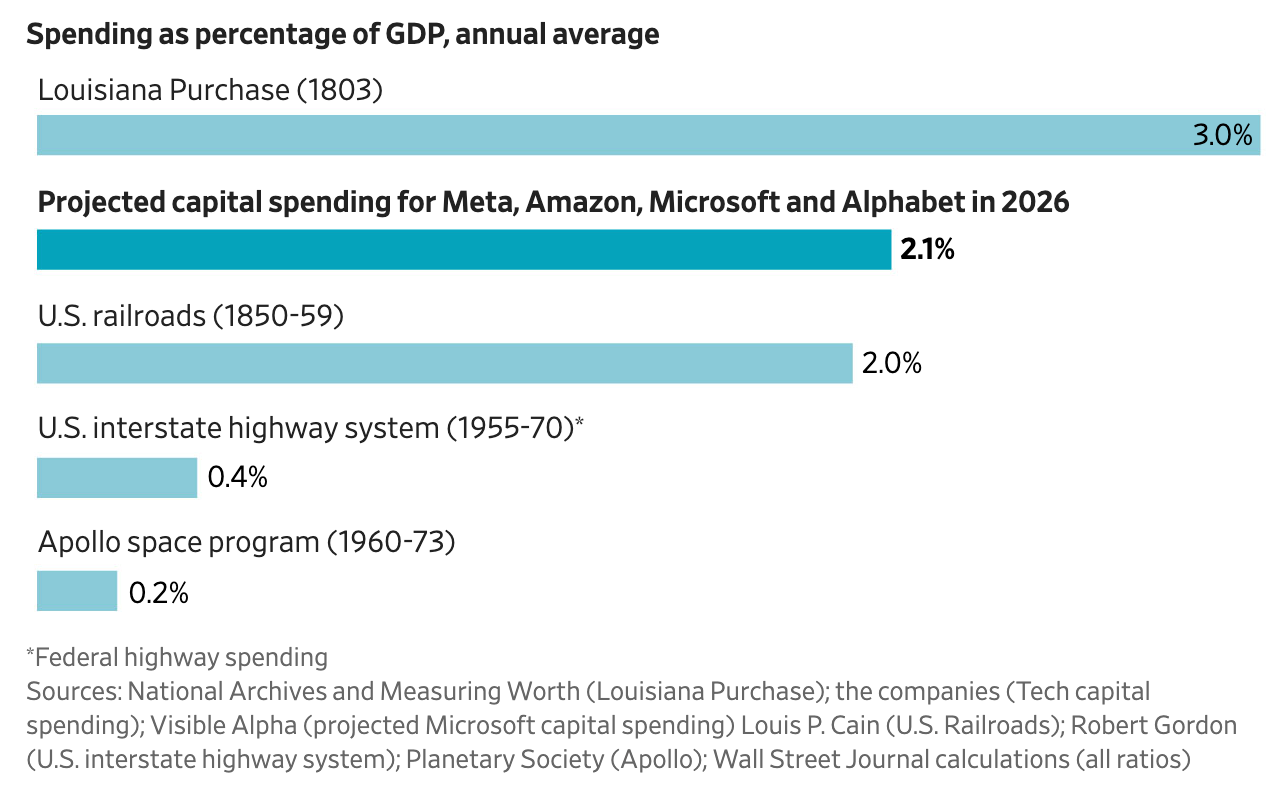

Four hyperscalers plan to spend up to $670 billion on AI infrastructure in 2026, a scale comparable to the largest infrastructure booms in American history as a share of GDP.

A meaningful share of AI “demand” may be inflated by venture-capital subsidies that keep consumer prices below actual inference costs. When those subsidies end, usage may not hold.

The Demand Signal Audit is a four-question framework for testing whether an infrastructure buildout is responding to durable demand or a distorted signal.

Efficiency breakthroughs (like DeepSeek) create a wildcard. They could shrink the need for infrastructure or, through Jevons paradox, amplify it.

If demand proves inflated, the losses won’t hit evenly. Picks-and-shovels names already got paid. Hyperscalers hold stranded-asset risk. And ratepayers may absorb the grid overbuild.

Remember the first time you took an Uber?

For a lot of people, it was sometime around 2014. You tapped a button, a car showed up, and the ride cost less than a taxi. It felt like magic. What most riders didn’t realize was that many trips were being subsidized by venture capital. Uber sustained large operating losses for years, using promotions and below-cost pricing to build the habit, capture the market, and lock in the network effects. When the subsidies eventually faded, prices increased materially. The demand didn’t vanish, but it didn’t stay where it was either. Some riders went back to their cars. Some switched to competitors. The “growth” that investors had been pricing in turned out to be partly a mirage, fueled by artificial pricing.

Something similar may be happening right now with artificial intelligence. And the infrastructure being built to serve that “demand” could be the most expensive subsidy hangover in history.

The Numbers Behind the Buildout

In the last few weeks, four companies announced capital spending plans that, taken together, rival the largest infrastructure buildouts in American history.

Microsoft, Meta, Amazon, and Alphabet are planning to spend up to $670 billion on AI infrastructure in 2026.[1] A WSJ comparison chart puts that figure at roughly 2.1% of U.S. GDP (based on its estimate of nominal GDP). For context, U.S. railroad investment in the 1850s averaged roughly 1.7% of GDP, with a peak around 2.6% in 1854.[2] The scale is comparable to the largest infrastructure booms in American history.[1]
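These shares are easy to sanity-check. The short Python sketch below backs out the nominal GDP implied by the $670 billion and 2.1% figures and compares the AI share with the railroad-era shares. The function and constant names are illustrative, and the implied GDP is a derived number rather than one taken from the WSJ chart.

```python
def implied_gdp(spend_billion: float, share_of_gdp: float) -> float:
    """Back out the GDP (in $ billions) implied by a spend and its share of GDP."""
    if share_of_gdp <= 0:
        raise ValueError("share_of_gdp must be positive")
    return spend_billion / share_of_gdp


# Figures quoted in the text above.
AI_CAPEX_BILLION = 670.0   # upper end of the 2026 capex plans
AI_CAPEX_SHARE = 0.021     # roughly 2.1% of U.S. GDP
RAIL_AVG_SHARE = 0.017     # 1850s railroad average
RAIL_PEAK_SHARE = 0.026    # 1854 railroad peak

gdp = implied_gdp(AI_CAPEX_BILLION, AI_CAPEX_SHARE)
vs_rail_avg = AI_CAPEX_SHARE / RAIL_AVG_SHARE
vs_rail_peak = AI_CAPEX_SHARE / RAIL_PEAK_SHARE

print(f"Implied nominal GDP: ${gdp / 1000:.1f} trillion")
print(f"AI capex share vs. railroad average: {vs_rail_avg:.2f}x")
print(f"AI capex share vs. railroad peak:    {vs_rail_peak:.2f}x")
```

On these inputs the AI share sits above the 1850s railroad average and below the 1854 peak, which is what "comparable" means here.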

Amazon alone expects $200 billion in 2026 capital spending, nearly a 60% increase from last year.[2] Meta’s 2026 spending could exceed 50% of its sales.[1] These are staggering numbers, and markets have noticed. Amazon lost $124 billion in market value in a single session after its capex announcement.[1]

The scale of spending raises a natural question. Is this the right amount?

But that’s actually the wrong question. The better question is this. Is the demand signal these decisions are based on trustworthy?

Follow the Signal Upstream

Here’s how the standard story goes. Cloud revenue is surging. AWS grew 24% to $35.6 billion in the most recent quarter.[3] Azure grew 39% and Google Cloud grew 48%.[3] AI workloads are driving the growth. Therefore, build more data centers.

That logic is clean on the surface. Demand is up, so supply should follow. But it skips a step.

Who is driving that cloud demand?

A meaningful share traces back to AI startups, companies funded by billions in venture capital that are burning cash to acquire users. Think about it from the user’s perspective. When you use ChatGPT, Claude, or Gemini on consumer pricing plans, you are likely not paying the fully-loaded cost of what it takes to run your query. Multiple chips fire for every word generated. Large-scale AI inference requires enormous compute.[4] The per-query cost is real, and many providers appear to be subsidizing consumer usage to grow their user base, though the exact unit economics remain opaque without detailed cost disclosures.

This creates a feedback loop that should make investors uncomfortable.

VC-funded startups subsidize AI usage, keeping prices artificially low. That subsidized usage shows up as surging demand on cloud platforms. Cloud providers report strong AI-driven revenue growth. Hyperscalers use that growth to justify massive capex increases. The impressive capex numbers and cloud growth then validate more VC funding into AI startups. And the cycle repeats.

The loop runs until someone stops funding it.

Anthropic, for instance, aims to break even by 2028 and implements aggressive rate limits on usage.[5] Rate limits function as a rationing mechanism. They can reflect capacity constraints, abuse prevention, or pricing below fully-loaded costs. The fact that major providers use them suggests current pricing may not reflect the full economics of inference at scale.

OpenAI’s own numbers underscore the point. The company generated around $4.3 billion in revenue in the first half of 2025, according to The Information, and expects an annualized run rate of about $20 billion by year-end.[9] But it still posted roughly $2.5 billion in net losses over the same period, largely on R&D and running ChatGPT.[9] The conversion numbers are even more telling. As of July 2025, only about 5% of ChatGPT’s weekly active users, roughly 35 million, paid for a subscription.[9] Even in OpenAI’s own projections, that figure reaches just 8.5% by 2030.[9] In other words, the company’s own forecast still has more than nine out of ten users on free or subsidized tiers five years from now.
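The conversion figures imply a user base that is worth writing down. The sketch below derives the total weekly users implied by each paying share. The 220 million paying-user projection for 2030 comes from the Reuters headline listed in Works Cited; the implied totals are derived here, not reported, and the function names are illustrative.

```python
def implied_weekly_users(paying_millions: float, paying_share: float) -> float:
    """Total weekly users (millions) implied by a paying count and a paying share."""
    if not 0 < paying_share <= 1:
        raise ValueError("paying_share must be in (0, 1]")
    return paying_millions / paying_share


def free_share(paying_share: float) -> float:
    """Share of users who are not on a paid subscription."""
    return 1.0 - paying_share


# July 2025: about 35 million payers at about a 5% paying share.
users_2025 = implied_weekly_users(35.0, 0.05)
# 2030 projection: 220 million payers at an 8.5% paying share.
users_2030 = implied_weekly_users(220.0, 0.085)

print(f"Implied weekly users, 2025: {users_2025:.0f} million")
print(f"Implied weekly users, 2030: {users_2030:.0f} million")
print(f"Non-paying share, 2025: {free_share(0.05):.1%}")
print(f"Non-paying share, 2030: {free_share(0.085):.1%}")
```

Even if the projection is met, the non-paying share moves only from 95% to 91.5%, so most of the projected usage would still need to be funded some other way.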

The Demand Signal Audit

I want to propose a simple framework for thinking about this. Call it the Demand Signal Audit. It works for any infrastructure buildout where the demand justifying the spend might be distorted.

Question 1. Who is paying?

Is the end user paying a market-clearing price, or is the price subsidized by investors hoping to monetize later? In the current AI buildout, a large portion of consumer AI usage appears to be priced below cost. Enterprise contracts are a different story, and if enterprise adoption (multi-year, committed spend) drives the majority of growth, the signal is stronger. The key metric to watch is the split between subsidized consumer/SMB API usage and profitable enterprise contracts in cloud AI revenue.

Question 2. What happens at breakeven pricing?

When prices rise to sustainable levels, does demand hold, drop, or migrate? Uber’s experience suggests demand drops but doesn’t disappear. AI may follow a similar pattern. Some users will pay full price because the productivity gains are genuine. Others will reduce usage. The crucial variable is how much of current demand falls into each bucket, and right now, nobody knows.
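One way to see why the unknown matters is to sweep over it. The sketch below assumes a constant-elasticity demand curve, which is a modeling convenience and not something the sources establish, and shows how much usage survives a price doubling under different elasticities. The elasticity values and the 2x price multiple are hypothetical.

```python
def retained_demand(price_multiple: float, elasticity: float) -> float:
    """Fraction of current usage that remains after prices rise by price_multiple.

    Assumes constant-elasticity demand: quantity scales with price ** (-elasticity).
    """
    if price_multiple <= 0:
        raise ValueError("price_multiple must be positive")
    return price_multiple ** (-elasticity)


PRICE_MULTIPLE = 2.0  # hypothetical: prices double to reach breakeven

for elasticity in (0.25, 0.5, 1.0, 2.0):  # hypothetical elasticities
    kept = retained_demand(PRICE_MULTIPLE, elasticity)
    revenue = PRICE_MULTIPLE * kept  # revenue relative to today
    print(f"elasticity {elasticity:>4}: usage kept {kept:.0%}, revenue {revenue:.2f}x")
```

Under this assumed curve, the same price increase leaves most usage intact at low elasticity and removes most of it at high elasticity, which is why the bucket split matters more than the headline growth rate.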

Question 3. Where does stranded-asset risk land?

If the buildout overshoots, who absorbs the losses? In the current cycle, the distribution is uneven. Nvidia and data center equipment suppliers get paid during the buildout regardless of what happens later. Hyperscalers bear stranded-asset risk on the data centers themselves. And depending on how power contracts and utility tariffs are structured, ratepayers and taxpayers may absorb grid overbuild costs if data center demand falls short of projections.[6]

Question 4. Is efficiency additive or substitutive?

When the cost of something drops, do people use more of it (additive, per Jevons paradox) or do they consume the same amount for less money (substitutive)? This is the DeepSeek question.

The Efficiency Wildcard

In early 2025, a relatively unknown Chinese company called DeepSeek released a paper showing it had built an AI model at significantly lower training costs than Western competitors were reporting. The market panicked. Nvidia lost $589 billion in market cap in one session, the largest one-day loss in market history.[7] DeepSeek later stated in a peer-reviewed Nature article that its R1 model cost just $294,000 to train.[6]

But the panic missed the more interesting dynamic. If you can do the same thing with a tenth of the chips, what happens when you throw all the chips at the more efficient approach? Historically, the answer is Jevons paradox. When something gets cheaper, people use more of it, not less.[4] Whale oil to kerosene to electricity. One lantern per household to dozens of light bulbs to ambient lighting in every room. Computing has followed the same pattern for decades.

But Jevons paradox depends on expanding use cases. Coal got cheaper and usage exploded because industrialization created thousands of new applications for cheap energy. If AI remains concentrated in chatbots, code generation, and content creation, efficiency improvements could genuinely reduce total compute demand rather than increase it. The buildout thesis assumes AI will expand into vision, video, robotics, scientific simulation, and domains we haven’t imagined yet. That may happen. It also may not happen as fast as the capex implies.
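The additive-versus-substitutive question can be made concrete with a small sketch. It assumes, purely for illustration, that the number of AI tasks people run responds to cost per task with a constant elasticity. If compute per task falls by some factor, tasks rise by that factor raised to the elasticity, so total compute changes by the factor raised to (elasticity minus one). The efficiency gain and elasticity values are hypothetical.

```python
def compute_demand_multiple(efficiency_gain: float, elasticity: float) -> float:
    """Change in total compute demand after compute per task falls by efficiency_gain.

    Assumed model: cost per task falls by 1 / efficiency_gain, tasks demanded rise by
    efficiency_gain ** elasticity, and each task needs 1 / efficiency_gain as much
    compute. Total compute therefore scales by efficiency_gain ** (elasticity - 1).
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return efficiency_gain ** (elasticity - 1.0)


GAIN = 10.0  # hypothetical: the same task needs a tenth of the chips

for elasticity in (0.5, 1.0, 1.5):  # hypothetical elasticities
    multiple = compute_demand_multiple(GAIN, elasticity)
    if multiple > 1:
        label = "additive (Jevons)"
    elif multiple < 1:
        label = "substitutive"
    else:
        label = "neutral"
    print(f"elasticity {elasticity}: total compute {multiple:.2f}x -> {label}")
```

In this toy model the whole outcome turns on whether elasticity is above or below one, and that in turn depends on whether new use cases appear to absorb the cheaper compute.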

The honest answer is that nobody knows which side of Jevons dominates here. And that uncertainty alone should give investors pause when looking at $670 billion in committed spending.

Who Holds the Bag?

If the demand signal turns out to be partially inflated, the losses won’t distribute evenly.

Picks-and-shovels companies (GPU makers, data center builders, networking equipment suppliers) get paid in cash during the buildout. They benefit regardless of whether the long-term demand materializes. Their risk is cyclical. If capex slows, their revenue drops. But they don’t hold stranded assets.

Hyperscalers bear the heaviest concentration of risk. A $15 billion data center that runs at 40% utilization instead of 90% is still a $15 billion asset on the books. Depreciation doesn’t care about demand. The hyperscalers are making a massive, long-duration bet that usage will grow into the capacity they’re building. If it doesn’t, they eat the difference.
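The utilization point reduces to simple arithmetic. The sketch below spreads a fixed depreciation charge over the capacity actually used. The six-year straight-line useful life is a hypothetical assumption for illustration; the ratio between the two scenarios does not depend on it.

```python
def annual_depreciation(cost_billion: float, useful_life_years: float) -> float:
    """Straight-line annual depreciation in $ billions."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return cost_billion / useful_life_years


def cost_per_utilized_capacity(annual_cost_billion: float, utilization: float) -> float:
    """Annual fixed cost divided by the fraction of capacity actually in use."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return annual_cost_billion / utilization


ASSET_COST_BILLION = 15.0   # data center cost from the text
USEFUL_LIFE_YEARS = 6.0     # hypothetical useful life

depreciation = annual_depreciation(ASSET_COST_BILLION, USEFUL_LIFE_YEARS)
cost_at_90 = cost_per_utilized_capacity(depreciation, 0.90)
cost_at_40 = cost_per_utilized_capacity(depreciation, 0.40)
penalty = cost_at_40 / cost_at_90

print(f"Annual depreciation: ${depreciation:.2f} billion at any utilization")
print(f"Fixed cost per unit of used capacity is {penalty:.2f}x higher at 40% than at 90%")
```

The depreciation charge is identical in both cases; only the revenue-generating base it is spread over shrinks.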

Ratepayers and communities face a quieter risk. Data centers require enormous, always-on power.[4] Grid upgrades, new generation capacity, and transmission buildouts are being planned around projected data center loads. If those loads don’t materialize, the cost of overbuilt power infrastructure could fall on local ratepayers or taxpayers rather than on the tech companies that requested it, depending on how utility tariffs and line extension policies are structured.[6]

This distributional question matters for investors. The “picks and shovels” framing sounds safe, but it depends on where you are in the cycle and what’s already priced in. The hyperscaler bet is more concentrated and more exposed to demand-signal accuracy. And the grid/utility play carries its own form of tail risk that most investors aren’t thinking about.

So What

This is not a prediction that the AI buildout will fail. AI is real. The productivity gains are real for many use cases. And unlike the dot-com bubble, when much of the newly laid fiber sat unused, frontier AI compute remains tight at leading labs and hyperscalers, even as utilization varies across organizations and workloads.[4]

But “demand is real today” and “demand justifies $670 billion in new infrastructure” are two different claims. The gap between them is where the risk lives.

Here is what the Demand Signal Audit suggests investors should watch.

What to measure. Track the split between enterprise AI contract revenue and consumer/SMB API usage in hyperscaler earnings. If enterprise contracts are growing independently of subsidized consumer usage, the demand signal is more durable. If cloud AI growth is heavily weighted toward VC-funded startup spend, the signal is more fragile.

What risks to watch. LLM provider pricing changes are the canary. When Anthropic, OpenAI, or Google raises prices or tightens rate limits, monitor whether usage holds or drops. A sharp usage decline after a price increase is evidence that demand was subsidized, not organic. Watch also for monetization pivots. OpenAI expects to generate about 20% of its revenue from new products such as shopping and advertising features, a signal that subscription demand alone may not close the gap.[9]

What tradeoff to accept. Picks-and-shovels positions are lower risk in the near term but carry cyclical downside if the buildout plateaus. Hyperscaler positions offer more upside if demand is real but more downside if it’s not. Grid and power plays depend on contract structures that most retail investors haven’t examined.

A falsifiable claim. If AI demand is significantly subsidized, we should see three things over the next 12 to 18 months. LLM provider losses growing faster than revenue. Usage dipping when rate limits or price increases hit. And a gap between enterprise AI contract growth and consumer/SMB API usage growth. If all three appear, the demand signal is inflated. If enterprise demand proves strong and independent of consumer subsidies, the capex is better grounded, and this thesis is wrong.
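The three-part test can be written as a small checklist. The sketch below is one possible encoding, not a calibrated model. The field names, the 10-percentage-point gap threshold, the reading of "gap" as consumer/SMB growth outrunning enterprise growth, and the "mixed" label for partial results are all assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DemandSignals:
    """Growth rates and changes expressed as fractions (0.25 means 25%)."""
    loss_growth: float                    # LLM provider loss growth
    revenue_growth: float                 # LLM provider revenue growth
    usage_change_after_price_rise: float  # usage change after a price or rate-limit change
    enterprise_growth: float              # enterprise AI contract growth
    consumer_api_growth: float            # consumer/SMB API usage growth


def audit(signals: DemandSignals, gap_threshold: float = 0.10) -> dict[str, bool]:
    """Return which of the three warning signs are present."""
    gap = signals.consumer_api_growth - signals.enterprise_growth
    return {
        "losses_outpace_revenue": signals.loss_growth > signals.revenue_growth,
        "usage_dips_on_price_rise": signals.usage_change_after_price_rise < 0,
        "enterprise_lags_consumer": gap > gap_threshold,
    }


def verdict(flags: dict[str, bool]) -> str:
    """All three signs: inflated. None: better grounded. Otherwise: mixed."""
    if all(flags.values()):
        return "inflated"
    if not any(flags.values()):
        return "better grounded"
    return "mixed"


# Hypothetical inputs, for illustration only.
example = DemandSignals(
    loss_growth=0.80,
    revenue_growth=0.50,
    usage_change_after_price_rise=-0.20,
    enterprise_growth=0.10,
    consumer_api_growth=0.60,
)
flags = audit(example)
print(flags)
print(verdict(flags))
```

Writing the test down this way forces a choice of thresholds in advance, which is what makes the claim checkable after the fact.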

That’s the point. Not to predict the outcome, but to know what to look for.

This is general education and does not constitute financial advice. You should consult a qualified professional before making investment decisions.

Works Cited

The Wall Street Journal, “Big Tech’s AI Push Is Costing a Lot More Than the Moon Landing”, Feb 7, 2026. https://www.wsj.com/tech/ai/ai-spending-tech-companies-compared-02b90046?mod=economy_lead_pos2

The Wall Street Journal, “Amazon Shares Sink as Company Boosts AI Spending by Nearly 60%”, Updated Feb 6, 2026. https://www.wsj.com/business/earnings/amazon-earnings-q4-2025-amzn-stock-996e5cc2?mod=business_feat3_earnings_pos3

YouTube, “Why Everyone Is Wrong About the AI Bubble”

Rui M. Pereira, William J. Hausman, and Alfredo Marvão Pereira, “Railroads and Economic Growth in the Antebellum United States”, Working Paper No. 153, College of William & Mary, December 2014. https://economics.wm.edu/wp/cwm_wp153.pdf

The Wall Street Journal, “Anthropic Is on Track to Turn a Profit Much Faster Than OpenAI”, November 2025. https://www.wsj.com/tech/ai/openai-anthropic-profitability-e9f5bcd6

Reuters, “China’s DeepSeek Says Its Hit AI Model Cost Just $294,000 to Train”, September 18, 2025. https://www.reuters.com/world/china/chinas-deepseek-says-its-hit-ai-model-cost-just-294000-train-2025-09-18/

Bloomberg News, “Nvidia’s $589 Billion DeepSeek Rout Is Largest in Market History”, January 27, 2025. https://www.bloomberg.com/news/articles/2025-01-27/asml-sinks-as-china-ai-startup-triggers-panic-in-tech-stocks

Natural Resources Defense Council, “A Better Path to Managing Data Center Load Growth”, September 2025. https://www.nrdc.org/sites/default/files/2025-09/Data_Centers_R_25-09-A_04_locked.pdf

Reuters, “OpenAI projects 220 million paying ChatGPT users by 2030, The Information Reports”, Nov 25, 2025. https://www.reuters.com/technology/openai-projected-least-220-million-people-will-pay-chatgpt-by-2030-information-2025-11-26/